15 Easy Ways to Improve Your Email Open Rate

Location targeting is your only option for accessing location-specific prices on Amazon. To do this, you need a backconnect node with location targeting. When you access this node, you get a new rotating IP with every connection.

Aside from product data, you can also use it for extracting news, articles, images, and forum discussions. Before using it, you can even test it without signing up to confirm that it works on the site you plan to use it on. With just an API call, you can get all of the publicly available data about a specified product on Amazon. But for pages that render even without JavaScript enabled, you can use the duo of Requests and BeautifulSoup.
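
To make the static-page case concrete, here is a minimal sketch of pulling two fields out of HTML that needs no JavaScript. To keep it self-contained it swaps in Python's standard-library `html.parser` for BeautifulSoup and parses a hard-coded sample instead of fetching a page with Requests. The markup, the `productTitle` and `price` ids, and the helper names are assumptions made for illustration, not Amazon's guaranteed page structure.

```python
from html.parser import HTMLParser

# Hypothetical static markup; the ids below are illustrative only and may
# not match any live page.
SAMPLE_HTML = """
<html><body>
  <span id="productTitle"> Example Widget </span>
  <span id="price">$19.99</span>
</body></html>
"""


class FieldExtractor(HTMLParser):
    """Collect the text of elements whose id is in `wanted_ids`."""

    def __init__(self, wanted_ids):
        super().__init__()
        self.wanted_ids = set(wanted_ids)
        self.current_id = None  # id of the element currently being read
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        element_id = dict(attrs).get("id")
        if element_id in self.wanted_ids:
            self.current_id = element_id
            self.fields[element_id] = ""

    def handle_data(self, data):
        if self.current_id is not None:
            self.fields[self.current_id] += data

    def handle_endtag(self, tag):
        # The sample elements are flat, so any closing tag ends the capture.
        self.current_id = None


def extract_fields(html, wanted_ids):
    """Return a dict of id -> stripped text for the requested ids."""
    parser = FieldExtractor(wanted_ids)
    parser.feed(html)
    return {key: value.strip() for key, value in parser.fields.items()}


if __name__ == "__main__":
    print(extract_fields(SAMPLE_HTML, ["productTitle", "price"]))
```

With Requests and BeautifulSoup the shape is the same: fetch the HTML, locate elements by id or class, and read their text.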

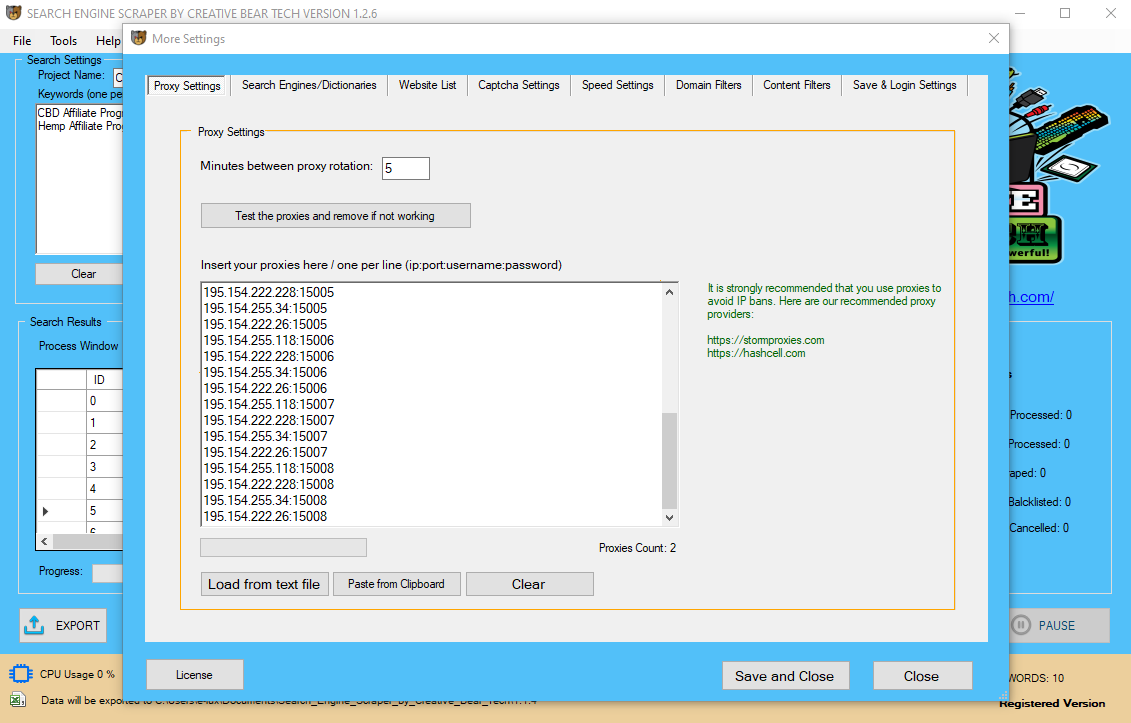

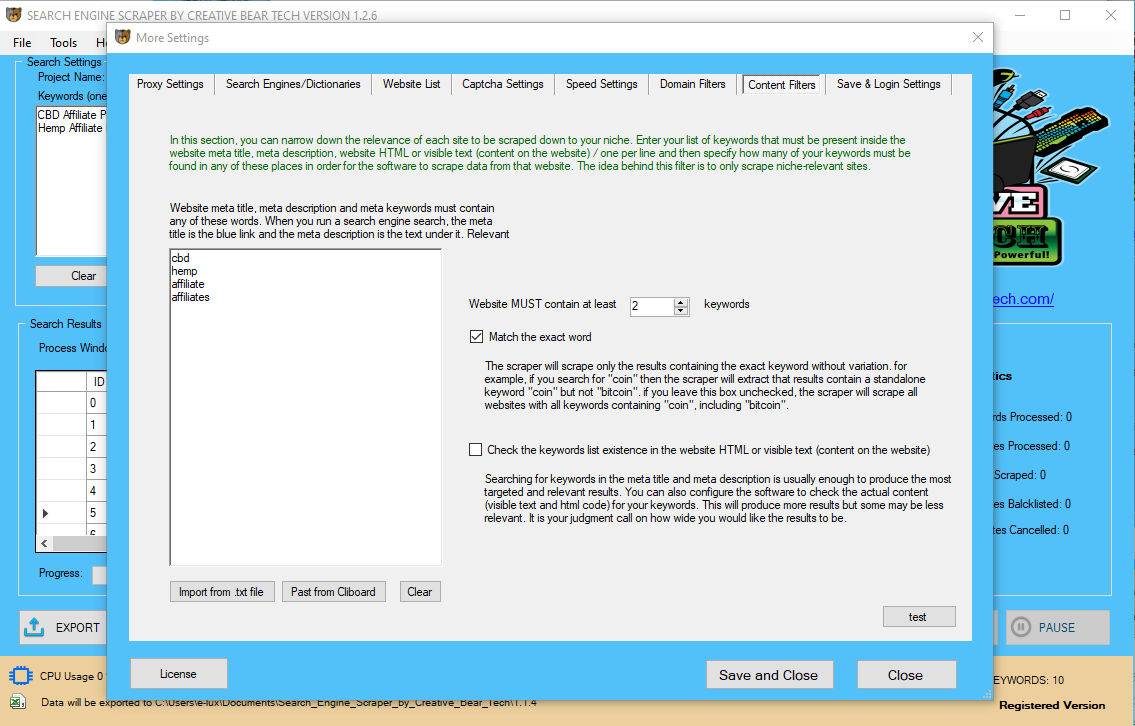

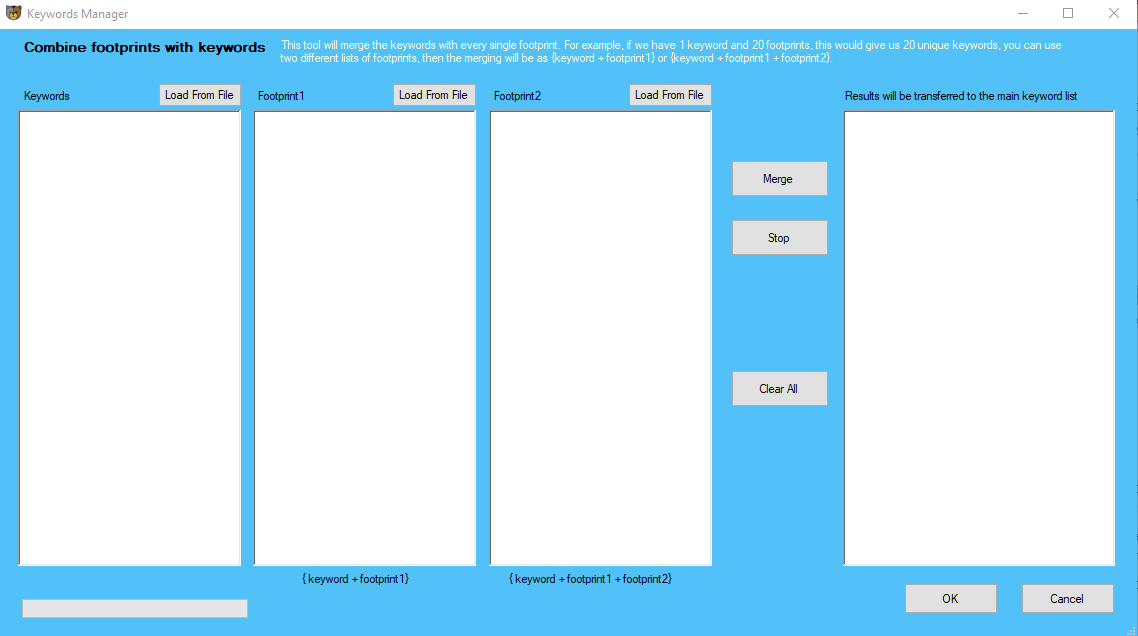

ParseHub, just like all of the above web scrapers, is a visual web scraping tool. Unlike the above, its desktop application comes free, though with some limitations that might not matter to you. ParseHub is extremely flexible and powerful. IP rotation is essential in web scraping, and when using the desktop application you have to take care of setting up proxies yourself. Helium Scraper is a desktop app you can use for scraping LinkedIn data.

Aside from review data and product data, data on top-rated products and their rankings can be used for detecting changes in the popularity of products. In fact, there is much more you can do with Amazon data if you can get your hands on it. To facilitate access to this data, Amazon provides an API. But this API is too restrictive and comes with a lot of limitations that make it unhelpful in most use cases. This can be a lot of work to do, and as such, it is advisable to use Selenium. If you browse the customer review pages, you will notice different layouts, and that the layouts change, sometimes between pages. All of this is a bid to prevent scraping.

There are at least a dozen programs for automated Amazon scraping. Some of the most popular ones are Chrome browser extensions like Web Scraper, Data Scraper, and Scraper. Other applications that allow scraping Amazon are Scrapy, ParseHub, OutWit Hub, FMiner, Octoparse, and WebHarvy. The above is a list of the five best Amazon scrapers on the market that you can use. ScrapeStorm supports a good number of operating systems and also has a cloud-based solution that is perfect for scheduling web scraping tasks. ParseHub is a free and powerful web scraping tool. With our advanced web scraper, extracting data is as easy as clicking on the data you need.

The online retail giant's system is also very vigilant and will outright ban any visitors that try scraping methods. This is why you need an Amazon proxy server to scrape it successfully. Dedicated datacenter proxies are both cheaper and faster, but Amazon will quickly notice such proxies and block them or feed you false information.

More complex commands, such as Relative Select and the command to scrape all pages, can be carried out on screen as well (though it's hard to call them more complicated). Relative Select means telling ParseHub to collect data that is related to your main selection. In the case of flight prices, the relative selection could be destinations or airlines.

Search For Products In Three Categories On Amazon

ParseHub is a visual data scraping and extraction tool that can be used to get data from a target website. Users do not need to code a web scraper and can easily generate APIs from the websites they need to scrape. ParseHub offers both free and custom enterprise plans for large-scale data extraction. What, then, do you do as a marketer or researcher interested in the wealth of data available on Amazon? The only option left to you is to scrape and extract the data you require from Amazon web pages.

For data that the automated detection system does not work for, you can use the point-and-click interface. ScrapeStorm was built by an ex-Google crawler team. It supports multiple data export methods and makes the whole process of scraping LinkedIn easy. If that's not enough, the user can check out paid plans for data scraping. ParseHub will make the relative selection on some pages from each name to one price. To fix this issue, simply click on the name of the second product and its price to guide ParseHub toward the data you need to extract. Use ParseHub if you want to scrape something like Amazon, Etsy, H&M, or any other online retailer. Once you've built the scraping model to your specifications, click the 'Get Data' button on the bottom left of the main command screen.

If you know you are not an experienced bot developer, you might as well use one of the ready-made LinkedIn scrapers discussed below this section. However, if you are ready to take on the challenge, you can give it a try and see how easy or difficult it is to bypass LinkedIn's anti-bot checks. Well, let me rephrase that: scraping LinkedIn is extremely hard, and with even the slightest mistake you will be sniffed out and blocked in no time. This is because LinkedIn has a very smart system in place to detect and deny bot traffic. You can scrape anything from user profile data to business profiles and job-posting data. With Helium Scraper, extracting data from LinkedIn becomes easy thanks to its intuitive interface.

However, make sure it sends the necessary headers with your requests, such as User-Agent, Accept, Accept-Encoding, Accept-Language, and so on. Without the headers that popular web browsers send, Amazon will deny you access, a sign that you have been fished out as a bot.

ParseHub is an intuitive and easy-to-learn data scraping tool. There are a variety of tutorials to get you started with the basics and then progress to more advanced extraction projects. It's also easy to start on the free plan and then migrate up to the Standard and Professional plans as required. LinkedIn does not provide a very comprehensive API that lets data analysts access the data they require. If you need to access any data in large quantities, the only free option available to you is to scrape LinkedIn web pages using automation bots known as LinkedIn scrapers. Helium Scraper comes with a point-and-click interface that's meant for training. Before starting a scraping process, make sure you're using reliable scraping proxies, as they can definitely make or break a project. What happens if the user doesn't use proxies? ParseHub is also one of the best LinkedIn scrapers on the market right now. ParseHub has been designed to let data analysts extract data from web pages without writing a single line of code. With Octoparse, you can convert web pages on LinkedIn into a structured spreadsheet. A rotating proxy, on the other hand, will change the scraper's IP for every request.

Proxycrawl has a good number of scrapers in its scraping API inventory, with a LinkedIn scraper as one of them. With this, you can scrape a lot of data from LinkedIn, ranging from company descriptions and employee data to user profile data and much more. Using Proxycrawl is as easy as sending an API request.
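
The header advice above (User-Agent, Accept, Accept-Encoding, Accept-Language) can be sketched with Python's standard-library `urllib.request`. This is a minimal illustration rather than a tested bypass: the header values are assumed examples modeled on a desktop browser, `build_request` is a hypothetical helper, and the block only constructs the request object without contacting any server.

```python
import urllib.request

# Illustrative values modeled on a desktop browser. The exact strings are
# assumptions; copy current values from a real browser before relying on them.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    # urllib does not decompress automatically, so a gzip-encoded body
    # would have to be decoded by the caller.
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url, headers=None):
    """Return a Request carrying browser-like headers (no network call)."""
    return urllib.request.Request(url, headers=headers or BROWSER_HEADERS)


if __name__ == "__main__":
    request = build_request("https://example.com/some-product")
    for name, value in request.header_items():
        print(f"{name}: {value}")
```

Passing the resulting object to `urllib.request.urlopen` would send it. The same dictionary can be handed to the `headers` argument of Requests.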

What Is A Proxy: Your Go-to Guide In 2020

- Any scraper will tell you that a successful operation depends on having good proxies.

- For example, if you are trying to scrape Amazon product data, you will make hundreds of connection requests to Amazon's servers every minute.

- By simply clicking on one of the data points, every other one with the same pattern will be highlighted, thanks to ParseHub's intelligent pattern detection.

- All that web traffic will look like an attack to Amazon.

These and many more will be discussed below. If the websites to scrape are complex, or you need a lot of data from multiple sites, this tool may not scale well. You can consider using open source web scraping tools to build your own scraper to crawl the web and extract data. Diffbot Automatic API makes the extraction of product data easy, not only on Amazon but on every other e-commerce website. ScrapeStorm is an intelligent scraping tool that you can use for scraping LinkedIn. ScrapeStorm uses an automated data point detection system to identify and scrape the required data. LinkedIn is quite popular as a source of research data and, as such, has some competing scrapers you can use for extracting data from it. I stated earlier that scraping LinkedIn is difficult.

All of those IPs will come from the same city, country, or location. If you are using location-targeted proxies, harvesting shipping price data from Amazon is easy. Helium Scraper offers a simple workflow and ensures fast extraction when capturing complex data. As for the amount of data that Helium Scraper can capture, that is put at 140 terabytes, as that is the amount of data SQLite can hold.

This will make your market research useless. If you are using datacenter proxies for your Amazon scraper, check your results manually to make sure you are on the right track. A scraper accesses large sets of pages or entire websites to compile data for market research. When you are developing a product or introducing it to the market, this data might as well be made of gold. Amazon dominates online retail and has enough data for any comprehensive market analysis. This is why scraping Amazon is on the mind of any ambitious marketer.

Many smaller businesses either work under Amazon's brand or try to compete with it. Your business cannot match Amazon in terms of the pricing data it has access to. Marketing agencies can use Amazon price scraping techniques to gather data on relevant Amazon products. Nevertheless, this approach is risky, because it goes against Amazon's terms of service.

How To Scrape LinkedIn Using Python And Selenium

Even better, the customer support is excellent. ParseHub has been a reliable and consistent web scraper for us for nearly two years now. Setting up your projects has a bit of a learning curve, but that's a small investment for how powerful their service is. We were one of the first customers to sign up for a paid ParseHub plan.

Octoparse has a good number of the features you want in a web scraper. Some of these are advanced web scraping features such as proxy rotation, scheduled scraping, and a cloud-based platform. Octoparse is a paid tool and good value for its pricing. However, the fact that people are interested in publicly available data does not mean they can get it easily.

Next, we'll tell ParseHub to expand the listing details before scraping them. First, we will add a new Select command and choose the “Read more concerning the area” link. Make sure to expand your new selections and delete the extraction of URLs. This way ParseHub will only extract the data you've selected and not the URLs they link to.

Scraping Amazon Product Page

Various pricing levels are offered, but if you're willing to cap out at 200 pages and make your data public, you can register a free account. ParseHub is available with quite a good free plan: it lets users scrape 200 pages in 40 minutes and create five custom projects. All e-commerce or online retail websites display products on search results pages. With ParseHub you can grab details about each product, both from the search page and from each product's own page. How you develop your scraper depends on the data you require.

For this project, we will use ParseHub, a free and powerful web scraper that can extract data from any website. We'll click on Directors and the text we need extracted (in this case, Barry Sonnenfeld). This will prompt ParseHub to look for the word "directors" on every product's page and, if found, scrape the director's name.

First, the user gets clocked, the IP gets blocked, and the user has to wave the scraping research goodbye. Second, money and business are lost. For these reasons, you should choose residential proxies for Amazon scraping. These are IPs used by real internet users, so they are much harder for Amazon to block. Residential proxies often use backconnect servers that are easy to set up and rotate. This lets you make hundreds of connection requests without getting banned.

Diffbot Automatic API will make your Amazon web scraping task easy, and you can even integrate it with your application. This Amazon scraper is easy to use and returns the requested data as JSON objects. Proxycrawl is an all-inclusive scraping solution provider with a good number of products tailored toward businesses interested in scraping data from the web. Among its Scraper APIs is an Amazon scraper, which can be said to be one of the best Amazon scrapers on the market.
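
A backconnect server rotates IPs for you. If you manage a proxy pool yourself, the same idea of a different IP for each request can be sketched as below. The proxy addresses are placeholders from a documentation-only address range, and `ProxyRotator` is a hypothetical helper, not part of any library. The returned mapping uses the `{"http": ..., "https": ...}` shape that both `urllib.request.ProxyHandler` and the `proxies` argument of Requests accept.

```python
from itertools import cycle

# Placeholder endpoints from the 203.0.113.0/24 documentation range;
# replace them with the proxies supplied by your provider.
PROXY_POOL = [
    "http://203.0.113.10:8000",
    "http://203.0.113.11:8000",
    "http://203.0.113.12:8000",
]


class ProxyRotator:
    """Hand out proxies round-robin so consecutive requests use different IPs."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = cycle(proxies)

    def next_proxy_config(self):
        """Return a proxy mapping for the next request."""
        proxy = next(self._cycle)
        return {"http": proxy, "https": proxy}


if __name__ == "__main__":
    rotator = ProxyRotator(PROXY_POOL)
    for request_number in range(4):
        print(request_number, rotator.next_proxy_config()["https"])
```

Round-robin is the simplest policy. Picking proxies at random or retiring ones that get blocked are natural extensions, but they are beyond this sketch.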

But does LinkedIn support the use of automation bots, or even web scraping in general? How easy is it to scrape publicly available data on LinkedIn, and what are the best LinkedIn scrapers on the market?

Another big drawback is that datacenter IPs come in groups called subnets. If one proxy gets banned, it can take up to 264 IPs with it. The worst thing that can happen when Amazon detects a scrape is that it might start feeding the product scraper false information. When this happens, the Amazon product scraper will access incorrect pricing information.

Before using ScrapeStorm, make sure you set it up the right way. It is powerful and can help you with enterprise-grade scraping. It's the perfect tool for non-technical people looking to extract data, whether that's for a small one-off project or an enterprise-type scrape running every hour.

To select the actual name of the director, we'll use the Relative Select command. By simply clicking on one of the data points, every other one with the same pattern will be highlighted, thanks to ParseHub's intelligent pattern detection.

Any scraper will tell you that a successful operation depends on having good proxies. For example, if you are trying to scrape Amazon product data, you will make hundreds of connection requests to Amazon's servers every minute. If you do this from your own IP, you will get blocked on Amazon instantly. All that web traffic will look like an attack to Amazon.

We were initially attracted by the fact that it could extract data from websites that other similar services could not (mainly thanks to its powerful Relative Select command). The team at ParseHub have been helpful from the start and have always responded promptly to queries. Over the last few years we have witnessed great improvements in both the functionality and reliability of the service. We use ParseHub to extract relevant data and include it on our travel website. This has drastically cut the time we spend on administrative tasks related to updating data.

Do you want to scrape Amazon yourself and avoid paying the high fees charged for ready-made Amazon scrapers on the market? Then you should know that you have a lot to deal with. You have to keep upgrading and updating your scraper, as they make changes to their website layout and anti-bot system to break existing scrapers. Captchas and IP blocks are also a major problem, and Amazon uses them a lot after a few pages of scraping. On top of that, Amazon can return a 200 status code and still return an empty response.
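
Because a 200 status code alone does not prove you received real content, a scraper can run a small sanity check before parsing. The sketch below is one way to do that, under stated assumptions: the function name, the result labels, and the captcha marker strings are illustrative guesses, not values documented by Amazon.

```python
# Assumed marker strings; adjust them to what blocked pages actually contain.
CAPTCHA_MARKERS = ("captcha", "robot check")


def classify_response(status_code, body):
    """Label a response as 'http_error', 'empty', 'captcha', or 'ok'."""
    if status_code != 200:
        return "http_error"
    text = body.strip()
    if not text:
        # A 200 response with nothing in it: treat it as a soft block.
        return "empty"
    lowered = text.lower()
    if any(marker in lowered for marker in CAPTCHA_MARKERS):
        return "captcha"
    return "ok"


if __name__ == "__main__":
    print(classify_response(200, ""))
    print(classify_response(200, "<html>Enter the CAPTCHA below</html>"))
    print(classify_response(200, "<html><span>Example Widget</span></html>"))
```

Anything other than `ok` is a signal to slow down, switch proxy, or retry later instead of saving the page as data.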